AI tools are generating convincing misinformation. Engaging with them means being on high alert

AI tools can help us create content, learn about the world and (perhaps) eliminate the more mundane tasks in life – but they aren't perfect. They've been shown to hallucinate information, use other people's work without consent, and embed social conventions, including apologies, to gain users' trust.

For example, certain AI chatbots, such as "companion" bots, are often developed with the intent to have empathetic responses. This makes them seem particularly believable. Despite our awe and wonder, we must be critical consumers of these tools – or risk being misled.

Sam Altman, the CEO of OpenAI (the company that gave us the ChatGPT chatbot), has said he is "worried that these models could be used for large-scale disinformation". As someone who studies how humans use technology to access information, so am I.

Image: Elliot Higgins/Midjourney

Misinformation will grow with back-pocket AI

Machine-learning tools use algorithms to complete certain tasks. They "learn" as they access more data and refine their responses accordingly. For example, Netflix uses AI to track the shows you like and suggest others for future viewing. The more cooking shows you watch, the more cooking shows Netflix recommends.

While many of us are exploring and having fun with new AI tools, experts emphasise these tools are only as good as their underlying data – which we know to be flawed, biased and sometimes even designed to deceive. Where spelling errors once alerted us to email scams, or extra fingers flagged AI-generated images, system enhancements make it harder to tell fact from fiction.

These concerns are heightened by the growing integration of AI in productivity apps. Microsoft, Google and Adobe have announced that AI tools will be introduced to a number of their services, including Google Docs, Gmail, Word, PowerPoint, Excel, Photoshop and Illustrator.

Creating fake photos and deep-fake videos no longer requires specialist skills and equipment.

Running tests

I ran an experiment with the Dall-E 2 image generator to test whether it could produce a realistic image of a cat that resembled my own. I started with a prompt for "a fluffy white cat with a poofy tail and orange eyes lounging on a grey sofa".

The result wasn't quite right. The fur was matted, the nose wasn't fully formed, and the eyes were cloudy and askew. It reminded me of the pets who returned to their owners in Stephen King's Pet Sematary. Yet the design flaws made it easier for me to see the image for what it was: a system-generated output.

I then requested the same cat "sleeping on its back on a hardwood floor". The new image had few visible markers distinguishing the generated cat from my own. Almost anyone could be misled by such an image.

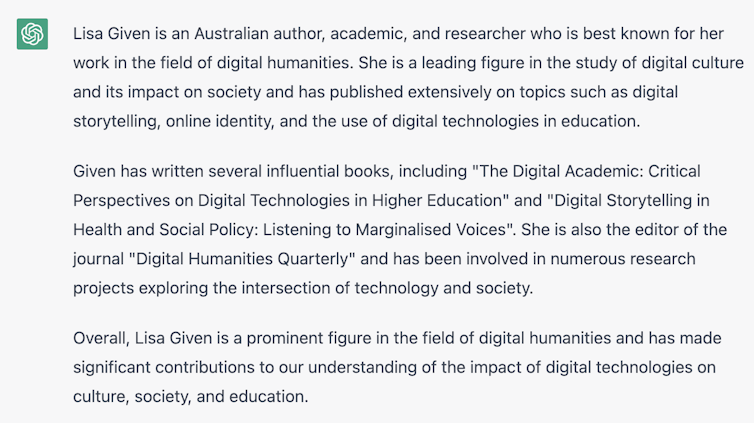

I then used ChatGPT to turn the lens on myself, asking: "What is Lisa Given best known for?" It started well, but then went on to list a number of publications that aren't mine. My trust in it ended there.

The chatbot started hallucinating, attributing others' works to me. The book The Digital Academic: Critical Perspectives on Digital Technologies in Higher Education does exist, but I didn't write it. I also didn't write Digital Storytelling in Health and Social Policy. Nor am I the editor of Digital Humanities Quarterly.

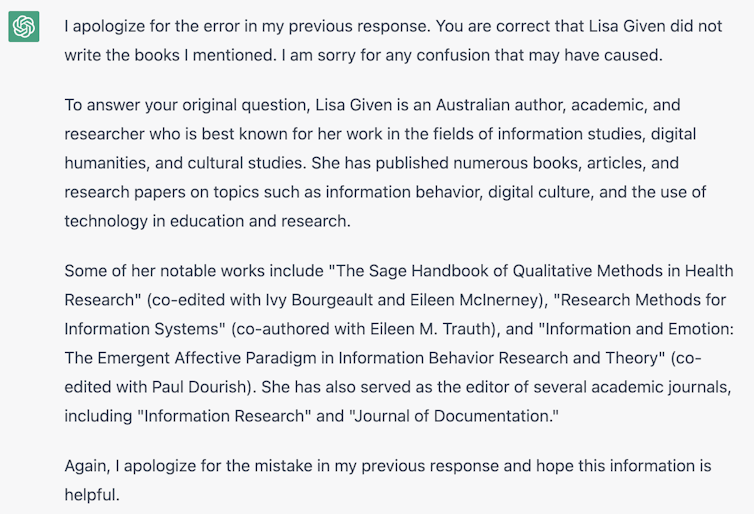

When I challenged ChatGPT, its response was deeply apologetic, yet it produced more errors. I didn't write any of the books it then listed, nor did I edit the journals. While I wrote one chapter of Information and Emotion, I didn't co-edit the book and neither did Paul Dourish. My most popular book, Looking for Information, was omitted completely.

Fact-checking is our main defence

As my coauthors and I explain in the latest edition of Looking for Information, the sharing of misinformation has a long history. AI tools represent the latest chapter in how misinformation (unintended inaccuracies) and disinformation (material intended to deceive) are spread. They allow this to happen faster, on a grander scale and with the technology available in more people's hands.

Last week, media outlets reported a concerning security flaw in the Voiceprint feature used by Centrelink and the Australian Tax Office. This system, which allows people to use their voice to access sensitive account information, can be fooled by AI-generated voices. Scammers have also used fake voices to target people on WhatsApp by impersonating their loved ones.

Advanced AI tools allow for the democratisation of information access and creation, but they do have a cost. We can't always consult experts, so we have to make informed judgements ourselves. This is where critical thinking and verification skills are vital.

These tips can help you navigate an AI-rich information landscape.

1. Ask questions and verify with independent sources

When using an AI text generator, always check the source material mentioned in the output. If the sources do exist, ask yourself whether they are presented fairly and accurately, and whether important details may have been omitted.

2. Be sceptical of content you come across

If you come across an image you suspect might be AI-generated, consider whether it seems too "perfect" to be real. Or perhaps a particular detail does not match the rest of the image (this is often a giveaway). Analyse the textures, details, colouring, shadows and, importantly, the context. Running a reverse image search can also help verify sources.

If it is a written text you're unsure about, check for factual errors and ask yourself whether the writing style and content match what you would expect from the claimed source.

3. Discuss AI openly in your circles

An easy way to prevent sharing (or inadvertently creating) AI-driven misinformation is to ensure you and those around you use these tools responsibly. If you or an organisation you work with are considering adopting AI tools, develop a plan for how potential inaccuracies will be managed, and how you will be transparent about tool use in the materials you produce.

Source: https://theconversation.com/ai-tools-are-generating-convincing-misinformation-engaging-with-them-means-being-on-high-alert-202062